Accelerating data analytics is crucial for storage vendors that offer systems for big data analytics, artificial intelligence (AI), and machine learning (ML). Speed is a key factor in AI workloads, and flash storage provides a significant performance advantage throughout the AI data pipeline. However, with an all-flash array (AFA), the latency of the storage software itself can become the bottleneck.

FlexSDS has developed an architecture that eliminates this bottleneck with a kernel-bypass, zero-copy, lock-free model for scale-out storage. By bypassing the operating system kernel on the I/O path, it significantly reduces latency, delivering a 10X+ performance boost compared to traditional storage software. This approach brings performance closer to hardware limits and lets the system scale seamlessly as more hardware resources are added.
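The zero-copy idea can be illustrated with a minimal Python sketch. It is not FlexSDS code; it only contrasts copying byte slices with handing out `memoryview` slices that reference the same underlying buffer, which is the principle a zero-copy I/O path applies to network and NVMe buffers. `BLOCK_SIZE`, `split_copy`, and `split_zero_copy` are hypothetical names chosen for the example.

```python
from __future__ import annotations

# Generic illustration of copying vs. zero-copy slicing; not FlexSDS code.
BLOCK_SIZE = 4096  # hypothetical block size for the example


def split_copy(buf: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Split a buffer into blocks; slicing bytes copies every block."""
    return [buf[i:i + block_size] for i in range(0, len(buf), block_size)]


def split_zero_copy(buf: bytearray, block_size: int = BLOCK_SIZE) -> list[memoryview]:
    """Split a buffer into memoryview slices that share the original memory."""
    view = memoryview(buf)
    return [view[i:i + block_size] for i in range(0, len(buf), block_size)]


if __name__ == "__main__":
    data = bytearray(b"A" * (3 * BLOCK_SIZE))
    copied = split_copy(bytes(data))  # independent copies of each block
    views = split_zero_copy(data)     # windows into `data`, no bytes copied

    data[0] = ord("B")  # modify the original buffer in place

    print(copied[0][0] == ord("A"))  # prints True: the copy does not see the change
    print(views[0][0] == ord("B"))   # prints True: the view does
```

The copying version pays for memory bandwidth and allocation on every block, while the views cost only a small descriptor each; avoiding such copies along the whole data path is what "zero-copy" refers to.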

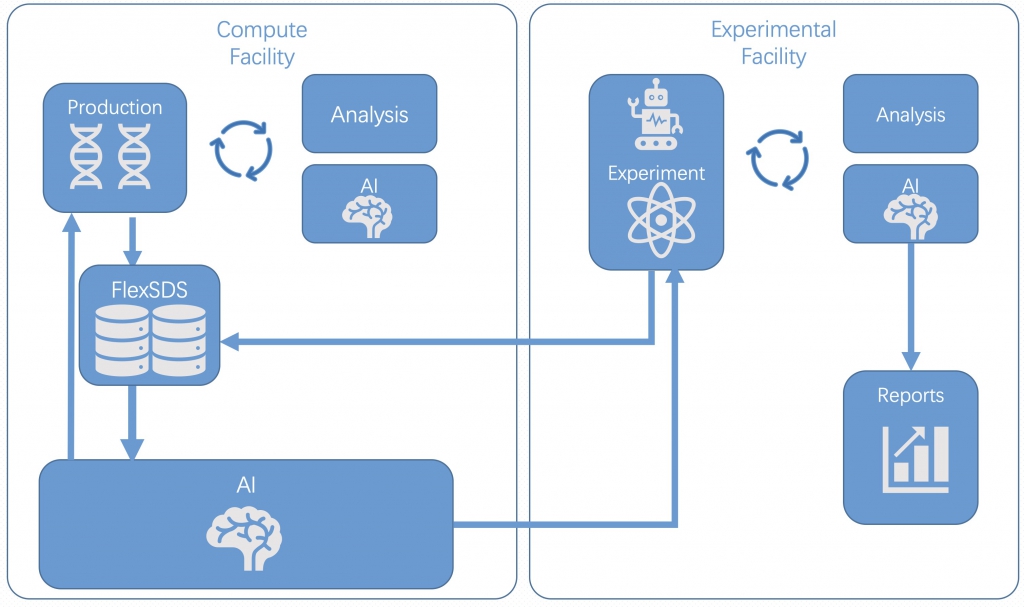

FlexSDS is a software-only solution that runs on industry-standard x86 hardware, offering unlimited capacity per node and supporting storage pools of up to 2 PB each. It can be deployed as external storage, as hyperconverged infrastructure (HCI) storage, or within private and hybrid cloud environments, delivering high-performance storage services for AI/ML workloads.

FlexSDS provides both block storage and a distributed file system (DFS):

- Block Storage: Supports thin provisioning, log-structured volumes, and RAW volumes, exporting them over SAN protocols.

- Distributed File System (DFS): Supports NFS and a native API, optimized for AI/ML workloads.
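Thin provisioning can be pictured with a toy Python model: the volume advertises a large virtual size but consumes physical space only for blocks that have actually been written. `ThinVolume`, its methods, and the 4 KiB block size are hypothetical and do not describe FlexSDS's on-disk format or allocator.

```python
from __future__ import annotations

BLOCK_SIZE = 4096  # hypothetical block size for the example


class ThinVolume:
    """Toy thin-provisioned volume: a large virtual size, with a physical
    block allocated only on the first write to each logical block."""

    def __init__(self, virtual_blocks: int, block_size: int = BLOCK_SIZE):
        self.virtual_blocks = virtual_blocks
        self.block_size = block_size
        self._map: dict[int, bytes] = {}  # logical block address -> stored data

    def _check(self, lba: int) -> None:
        if not 0 <= lba < self.virtual_blocks:
            raise IndexError("LBA out of range")

    def write_block(self, lba: int, data: bytes) -> None:
        self._check(lba)
        if len(data) != self.block_size:
            raise ValueError("data must be exactly one block")
        # Lazy allocation: only written blocks consume space;
        # overwriting an existing block reuses its allocation.
        self._map[lba] = bytes(data)

    def read_block(self, lba: int) -> bytes:
        self._check(lba)
        # Never-written blocks read back as zeros without using any space.
        block = self._map.get(lba)
        return block if block is not None else bytes(self.block_size)

    @property
    def allocated_bytes(self) -> int:
        return len(self._map) * self.block_size


if __name__ == "__main__":
    vol = ThinVolume(virtual_blocks=1_000_000)  # about 4 GB advertised
    vol.write_block(42, b"\x01" * BLOCK_SIZE)
    print(vol.allocated_bytes)  # prints 4096
```

A production allocator would also need persistence, a free-space map, and space reclamation (for example via TRIM/UNMAP), all of which this sketch omits.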

Designed for AI/ML and Big Data environments, FlexSDS enables organizations to run applications at any scale, achieve faster time-to-value, and expand non-disruptively, whether by scaling up or scaling out.

FlexSDS Key Features for AI & Big Data

- High-Performance Distributed File System: Designed for AI/ML workloads, delivering parallel, high-speed data access.

- Advanced Hardware Support: Supports NVMe SSDs (with kernel bypass) and RDMA (InfiniBand, RoCE, iWARP), and is fully optimized for all-flash arrays.

- Fast Deployment & Scalability: Enables rapid storage provisioning, dynamic workload scaling, and intelligent load balancing.

- Next-Gen Architecture: Built for modern hardware with a kernel-bypass, lock-free, zero-copy design and extensive CPU cache optimization for ultra-low latency.

- Parallel & Concurrent I/O: Maximizes storage and network resource utilization for high throughput and low-latency performance.

- Data Protection & High Availability:

  - Multi-node data replication and erasure coding (EC) for fault tolerance.

  - Automated failover and self-healing mechanisms.

  - Efficient asynchronous replication for disaster recovery.

- Cost Efficiency: Reduces Total Cost of Ownership (TCO) with efficient resource utilization.

- Simplified Management: Single-pane-of-glass management for seamless administration.

- Massive Scalability: Supports up to 1,024 server nodes, offering both block storage and DFS services.

- Comprehensive Protocol Support: iSCSI, iSER, NVMe-over-Fabrics, NFS, and native APIs.

- Advanced Snapshot & Replication:

  - Unlimited space-optimized snapshots and clones.

  - Periodic or continuous asynchronous long-distance replication.
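Erasure coding, listed above under data protection, trades extra computation for lower storage overhead than full replication. The smallest correct example is single XOR parity (the scheme behind RAID 5): k data shards plus one parity shard survive the loss of any one shard. Production EC schemes typically use codes such as Reed-Solomon to tolerate multiple failures, and this Python sketch says nothing about FlexSDS's actual EC layout; `encode`, `recover`, and `xor_bytes` are hypothetical helpers.

```python
from __future__ import annotations

from functools import reduce
from typing import Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length shards."""
    if len(a) != len(b):
        raise ValueError("shards must have equal length")
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal, zero-padded data shards plus one XOR parity shard."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\x00")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = reduce(xor_bytes, shards)  # parity = d0 ^ d1 ^ ... ^ d(k-1)
    return shards + [parity]


def recover(shards: list[Optional[bytes]]) -> list[bytes]:
    """Rebuild at most one missing shard (data or parity) by XOR-ing the survivors."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can recover only one lost shard")
    rebuilt = list(shards)
    if missing:
        survivors = [s for s in shards if s is not None]
        # XOR of all surviving shards equals the one that was lost.
        rebuilt[missing[0]] = reduce(xor_bytes, survivors)
    return rebuilt


if __name__ == "__main__":
    data = b"hello erasure coding!"
    shards = encode(data, k=4)
    shards[2] = None  # simulate losing the node that held shard 2
    restored = recover(shards)
    # The original length must be stored separately to strip the padding.
    print(b"".join(restored[:4])[:len(data)] == data)  # prints True
```

With k data shards, single parity stores (k + 1) / k times the raw data, for example 1.25x for k = 4, compared with 3x for triple replication; that capacity saving is the usual reason EC is offered alongside replication.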